Why is that?

The AI landscape has exploded in complexity. As a result, the team needed to successfully design, develop, and implement an AI solution has grown from data scientists alone to include product managers, user experience designers, ethicists, and other domain specialists.

The more professionals get involved in the decision-making process, the greater the chance for misunderstandings and miscommunication. And as I pointed out in my ODSC West 2021 session, this growing gap in AI literacy is contributing to the high failure rate we’re seeing across AI projects today.

Continue reading to identify the communication gaps that lead to failure and to learn how to improve AI literacy across your organization with design thinking.

As humans, we take for granted a lot of the intelligence building blocks we’ve naturally developed over the years. Usually without realizing it, we connect new concepts, in context, to other pieces of information we already know.

For example, we can quickly determine if an object is living, its own entity, or just a plain background. We can see a picture of a cat facing the side and another facing forward, and know that they are both cats.

Machine learning doesn’t work the same way, and won’t for some time.

Machines must train on hundreds, sometimes thousands, of photos to accurately identify the components of a cat. And yet, a trained model may still fail to recognize a cat against a new background or from a different perspective.

Understanding how machines learn can help us better safeguard against bias and risk in AI solutions.

Oftentimes, society and data scientists alike picture a magic black box when they think of AI solutions—you input data and it automatically spits out a recommendation.

While many of us generally understand inputs, outputs, and that models need to be trained, we’re beginning to expect more from AI without understanding what’s happening under the hood.

To break down the complexity of your solution, and have better discussions around its value to your organization and others, start thinking in simpler subtasks, or building blocks.

For example, an algorithm that provides a customer experience specialist with next-best actions for a customer, based on feedback, may consist of the following related subtasks:

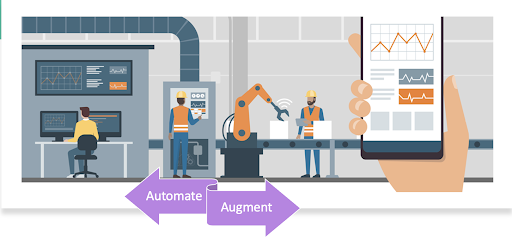

While AI can automate simple tasks, your team must realize that AI can also augment existing jobs to make them easier and more efficient.

What’s the difference between automation and augmentation?

Automation means that the machine has totally taken over the task and requires minimal human intervention. Augmentation means that humans are working closely with a machine to complete a task.

We get into trouble when we expect AI to automate entire jobs, as opposed to specific tasks within jobs that still require human creativity. When we think about AI in terms of augmentation, it gives us an opportunity to successfully re-imagine human work without over-engineering a solution. This also sets us up for greater adoption success when a solution is launched.
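To make the distinction concrete, here is a minimal sketch of one common way to set up augmentation: the model handles predictions it is confident about, and everything else goes to a person. This is an illustration only, not a prescribed design. The `Prediction` type, the `route` and `split_workload` functions, and the 0.80 threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical cutoff: predictions below this confidence go to a person.
REVIEW_THRESHOLD = 0.80


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's self-reported confidence, between 0 and 1


def route(prediction: Prediction, threshold: float = REVIEW_THRESHOLD) -> str:
    """Return "auto" when the model acts alone, "human_review" otherwise."""
    return "auto" if prediction.confidence >= threshold else "human_review"


def split_workload(
    predictions: List[Prediction], threshold: float = REVIEW_THRESHOLD
) -> Dict[str, List[Prediction]]:
    """Group predictions by who handles them: the machine or a person."""
    workload: Dict[str, List[Prediction]] = {"auto": [], "human_review": []}
    for prediction in predictions:
        workload[route(prediction, threshold)].append(prediction)
    return workload
```

Raising the threshold sends more work to people, and lowering it moves the solution closer to full automation. Where that line sits is a business decision as much as a technical one, which makes it a useful topic for the whole team to discuss.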

If a human scored a 3/5 or even 4/5 on a test, we would consider the performance not so great. But for AI, that performance would be considered state-of-the-art in some cases.

We often think about model performance in terms of accuracy, or how often a model is right. However, we overlook other key performance indicators: how often we learned new information from the model, how often the model led to a biased outcome, or the cost to retrain a model on new data. The list goes on.

Interpreting AI performance and outputs takes considerable training. In the meantime, your organization can work with its data scientists to set realistic business KPIs tied to the model’s performance beyond accuracy.
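As a rough illustration of looking beyond accuracy, the sketch below reports accuracy alongside one simple proxy for biased outcomes: the gap in positive-prediction rates between groups (sometimes called the demographic parity difference). This is only one of many possible indicators, and it is my choice for the example rather than a recommended standard. The records, group names, and function names are all made up.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record is (group, true_label, predicted_label). All values are made up.
Record = Tuple[str, int, int]

SAMPLE: List[Record] = [
    ("A", 1, 1), ("A", 0, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 0),
]


def accuracy(records: List[Record]) -> float:
    """Share of records where the prediction matches the true label."""
    correct = sum(1 for _, y_true, y_pred in records if y_true == y_pred)
    return correct / len(records)


def positive_rate_by_group(records: List[Record]) -> Dict[str, float]:
    """Share of positive predictions (label 1) within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, _, y_pred in records:
        totals[group] += 1
        positives[group] += y_pred
    return {group: positives[group] / totals[group] for group in totals}


def positive_rate_gap(records: List[Record]) -> float:
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())
```

On this toy data, `accuracy(SAMPLE)` is 0.75, yet group A receives a positive prediction 75% of the time and group B only 25% of the time. A team that tracked accuracy alone would never see that gap.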

The lack of AI literacy in today’s organizations presents a major hurdle to successfully designing and deploying Trustworthy AI solutions. To overcome these communication gaps and achieve success with AI, you must implement design thinking in every step of the process.

Design thinking allows teams to build solutions based on observed human needs and values, rather than assumed needs and values. Each step of the AI design and development process shown above allows team members time to consider the solution they’re building, and its value to the organization and its stakeholders.

Stakeholders are often unsure how to articulate their needs, and data scientists often lack a firm grasp of the domain. That makes it especially important to hold these conversations regularly and collaboratively.

You can take the following steps, inspired by design thinking, to dramatically reduce the risk of your next AI project.

Over the years, AI projects have seen incredibly low success rates. And as the AI landscape continues to evolve, communication gaps will only worsen. However, organizations can avoid project failure by implementing design thinking.


Cal Al-Dhubaib is an AI Evangelist and CEO of Pandata.
